Introduction & Motivation

In the fast-paced world of software development, tools that increase productivity are worth their weight in gold. GitHub Copilot has attracted a lot of attention as an AI-powered code generator and assistant. To measure its true value, we put Copilot under the microscope in an experiment. Our goal was to find out whether and how Copilot can improve our development processes and, most importantly, our productivity. In this post, I share the detailed results of this experiment and our interpretation of them.

What is GitHub Copilot?

GitHub Copilot is an AI-powered code completion tool developed by GitHub and OpenAI. It helps developers write code faster by automatically generating code suggestions. Copilot was first announced in June 2021.

GitHub Copilot is based on GPT (Generative Pre-trained Transformer) technology. At launch it was powered by OpenAI Codex, a model descended from GPT-3. Copilot analyzes the context of the code the developer is writing and then automatically suggests code fragments that fit this context.

Developers can use GitHub Copilot as an extension in their favorite integrated development environment (IDE). It supports a variety of programming languages and is designed to help developers work more productively by providing them with a kind of "collaborative" approach to code creation.

The experimental setup

Copilot version and integration:

We used GitHub Copilot Business as a plugin in PhpStorm.

Copilot features

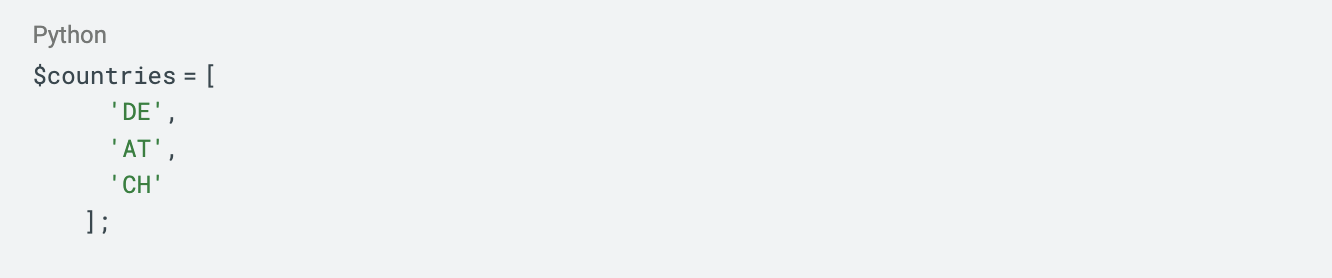

1. AI-assisted autocompletion when typing.

Input:

Output:

2. AI-assisted autocompletion from comments

Here is a simple example. You can also use comments to generate entire classes, which can then serve as a first draft for the final code.

Input:

Output:

The team

Each of the 5 developers on our team received a GitHub Copilot license. During the experiment (1.07.2023 - 31.01.2024), the team included both junior and senior developers.

Each developer learned on their own how to work with Copilot.

- Project and team context: The team used Copilot in day-to-day project work on 2-3 projects and has been working with Kanban for years. During the experiment, the team held regular retrospectives, which led to further experiments running in parallel to this one.

- Criteria for success: Our hypothesis was that if Copilot achieves an improvement of 10% in the following metrics, we consider the experiment successful:

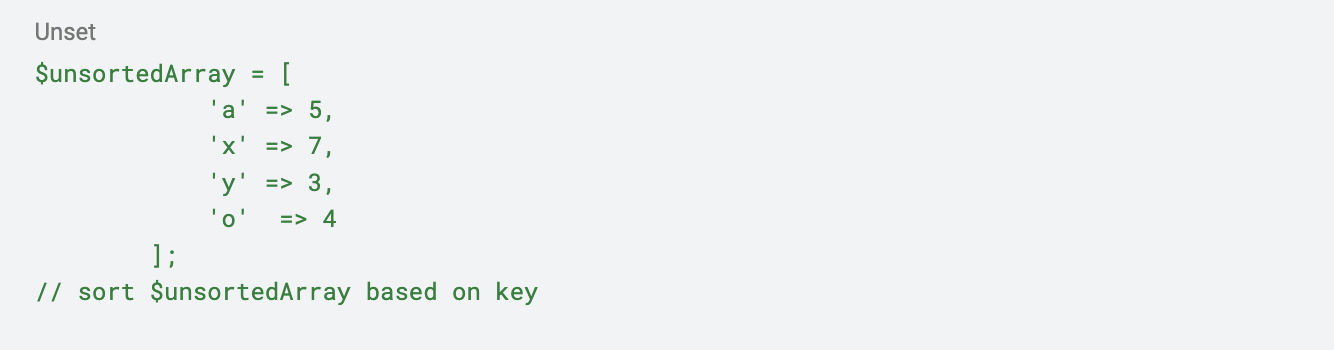

- Lead time: For the lead time metric, we only evaluated the period that Copilot can influence. Specifically, we measured the time (processing + idle time) that a ticket takes from the start of development until local testing by a second person succeeds. The time for deployment and further testing by developers, POs and customers is therefore not taken into account.

We chose stories as the measured ticket type, as these involve the most programming effort. The following graphic shows the TechDivision Lead Times Dashboard with sample data:

- Average processing time of a ticket per ticket type: This metric captures the effort per ticket for each ticket type in order to check whether it has changed. Only the developers' worklogs for tickets with the status "In Progress" were considered here.

- Ticket rejection rate: This metric is an attempt to measure whether Copilot has an impact on code quality. A rejection is counted when a ticket fails the second dev test or is rejected by the customer. As an additional metric, we included the occurrence of blocker tickets. A blocker ticket is created when an error on a live system prevents users from using the store normally. Such critical problems include, in particular, incorrect prices or a non-functioning checkout.

- Subjective opinion of the developers: In order to check whether the tool was popular and perceived as helpful and productivity-enhancing, each developer had to rate their experience on a daily basis from 1 to 4 (1 = very negative, 4 = very positive).
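The lead time definition above can be sketched in a few lines. This is only an illustration of the calculation, not the logic behind our dashboard: the function name and the ticket timestamps are invented for the example.

```python
from datetime import datetime
from statistics import mean


def lead_time_days(dev_started: datetime, second_test_passed: datetime) -> float:
    """Elapsed days (processing + idle time) from the start of development
    until the second developer's local test succeeded."""
    return (second_test_passed - dev_started).total_seconds() / 86400


# Invented story tickets: (development started, second dev test passed).
stories = [
    (datetime(2023, 7, 3, 9, 0), datetime(2023, 7, 17, 9, 0)),
    (datetime(2023, 7, 10, 9, 0), datetime(2023, 8, 7, 9, 0)),
]

average_lead_time = mean(lead_time_days(start, done) for start, done in stories)
print(f"Average lead time: {average_lead_time:.1f} days")
```

Deployment and later testing by POs or customers happen after the second timestamp, so they do not enter the figure.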

Concrete results

For the measurements, the two seven-month periods 1.07.2023 - 31.01.2024 and 1.12.2022 - 30.06.2023 were compared.

Lead time and ticket duration

The lead time for stories increased by 2 days, which corresponds to a deterioration of 9.5%. The average duration of a ticket also shows no improvement, which indicates that Copilot did not measurably increase our efficiency in these areas.

Rejection rate and blocker tickets

Interestingly, the rejection rate of tickets in second dev testing increased significantly after the introduction of Copilot - from 17.79% to 26.69%. In addition, the rejection rate for customer testing also increased from 3.36% to 9.65%.

However, this is most likely due to another experiment that started at almost the same time as this one: quality controls were improved, and more precise testing procedures were introduced within the team and with our customers.

Furthermore, the proportion of tickets with the priority "Blocker" remained almost unchanged with a minimal decrease from 4.72% to 4.63%.
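To put these figures next to the 10% success criterion, the following sketch computes both the change in percentage points and the relative change for each rate. Reading the 10% target as a relative change per metric is an assumption made for this example, and the function and variable names are illustrative.

```python
TARGET_IMPROVEMENT_PERCENT = 10.0  # success threshold from the criteria above


def relative_change_percent(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Rates in percent: (comparison period, Copilot period).
measurements = {
    "Rejections in second dev test": (17.79, 26.69),
    "Rejections in customer test": (3.36, 9.65),
    "Blocker tickets": (4.72, 4.63),
}

for name, (before, after) in measurements.items():
    change = relative_change_percent(before, after)
    # Lower is better for all three rates, so an improvement is a negative change.
    target_met = change <= -TARGET_IMPROVEMENT_PERCENT
    print(f"{name}: {after - before:+.2f} points, "
          f"{change:+.1f}% relative, target met: {target_met}")
```

Under this reading, none of the three rates comes close to the target: the two rejection rates rise by about 50% and 187% in relative terms, and the blocker share falls by about 2%.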

Subjective evaluations

Developers pointed out the usefulness of Copilot for repetitive tasks and the simple implementation of native PHP functions. Criticism was mainly directed at the accuracy of more complex code suggestions and the potential distraction of inappropriate suggestions.

Overall, the average score in the daily survey was 3.48 out of 4, which we consider very positive.

Interpretation of the results

When evaluating the metrics, it became clear how difficult it is to draw conclusions about the experiment with GitHub Copilot from them. In day-to-day project work, there are many influencing factors that make the metrics hard to interpret. Changes in team size or ticket volume are just two of them.

Although the developers were very satisfied with Copilot, the measured metrics initially indicate a deterioration in productivity and quality. However, there is another aspect that only became apparent when evaluating the metrics, namely what exactly Copilot has an impact on: writing code.

The test subjects spent only between 2% and 12% of the time on a ticket actually writing code. Writing code is defined here as pure typing work, as the current version of Copilot, being an AI-supported autocompleter, supports this almost exclusively. The remaining roughly 90% (88% to 98%) is the time a developer needs to understand the requirements of the ticket and research how to implement them.

We interpret the finding that Copilot mainly affects the small proportion of approx. 2-12% to mean that the influence of Copilot is simply too small to be visible in the metrics.
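A back-of-the-envelope bound in the style of Amdahl's law makes this concrete. If typing accounts for a share p of ticket time and Copilot makes typing s times faster, the ticket time saved is p · (1 - 1/s). The typing shares below come from our observations; the speedup factors are purely hypothetical, since we did not measure them.

```python
def ticket_time_saving(typing_share: float, typing_speedup: float) -> float:
    """Fraction of total ticket time saved when only the typing part gets faster."""
    return typing_share * (1 - 1 / typing_speedup)


# Typing shares from the post; speedup factors are assumptions, not measurements.
for typing_share in (0.02, 0.12):
    for typing_speedup in (2.0, float("inf")):
        saving = ticket_time_saving(typing_share, typing_speedup)
        print(f"typing share {typing_share:.0%}, speedup {typing_speedup}x: "
              f"{saving:.1%} of ticket time saved")
```

Under these assumptions, even instantaneous typing saves at most 12% of ticket time. At a typing share of 12%, typing would have to become about six times faster to reach a 10% saving, and at lower shares the target is out of reach entirely.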

Conclusion

The experiment has shown that the use of AI-supported code completion alone has almost no measurable influence on software development in the project business. The metrics we originally envisaged turned out to be unsuitable, meaning that conclusions about the added value of GitHub Copilot in the functionality used can unfortunately only be drawn to a very limited extent.

Outlook

We are not the only ones who have realized that Copilot as a "slightly better autocompleter" does not exploit its full potential, as it mainly accelerates pure typing work. In order to reduce the mental work involved in software development (approx. 90% as described above), GitHub is currently testing Copilot Chat in a beta phase. The new features include a chat (similar to ChatGPT), the ability to find bugs in the code, refactoring suggestions and much more. You can reference selected code snippets via chat and receive answers tailored to the current project context. One of the biggest advantages is that you don't have to search for answers in the browser yourself, but can do this directly in the development environment with AI support.

The future of tools like Copilot lies in fine-tuning their algorithms to improve the accuracy and relevance of suggestions. At the same time, teams need to learn how to integrate these tools more effectively into their workflows to maximize the benefits.

This experiment has given us the first valuable insights into the possibilities and limitations of Copilot in the version available at the time. It serves as a basis for further experimentation and discussion on how we can use technology to advance the art of software development.
