For a while, the container technology Docker was on everyone's lips, and every IT company that wanted to be "en vogue" used Docker in some form, even where it was not the optimal solution. We, too, worked intensively with Docker over a long period of time and used the tool in our projects.

Over time, however, we increasingly moved away from it and have now completely abandoned Docker. This in no way means that we do not see or understand the advantages of container solutions; quite the opposite. In our day-to-day work with some extremely demanding scenarios, we have come to appreciate LXC more and more.

A comparison of the two technologies

With ever-increasing virtualization and the spread of distributed systems, container technologies are being used more and more frequently. Containers are not a new technology: the Solaris platform has offered Solaris Zones for many years, and many system administrators have used BSD jails as a lightweight alternative to virtual machines. The growing interest in Docker and LXC (Linux Containers) has brought about a resurgence of containers as a more user-friendly solution.

So how do you find the container solution that suits you best? This post is based on an article by Robin Systems and outlines the pros and cons of both technologies. The following criteria are taken into account:

- Popularity

- Architecture

- Storage management

- Client tools and onboarding

- Image registry

- Application support

- Vendor support & ecosystem

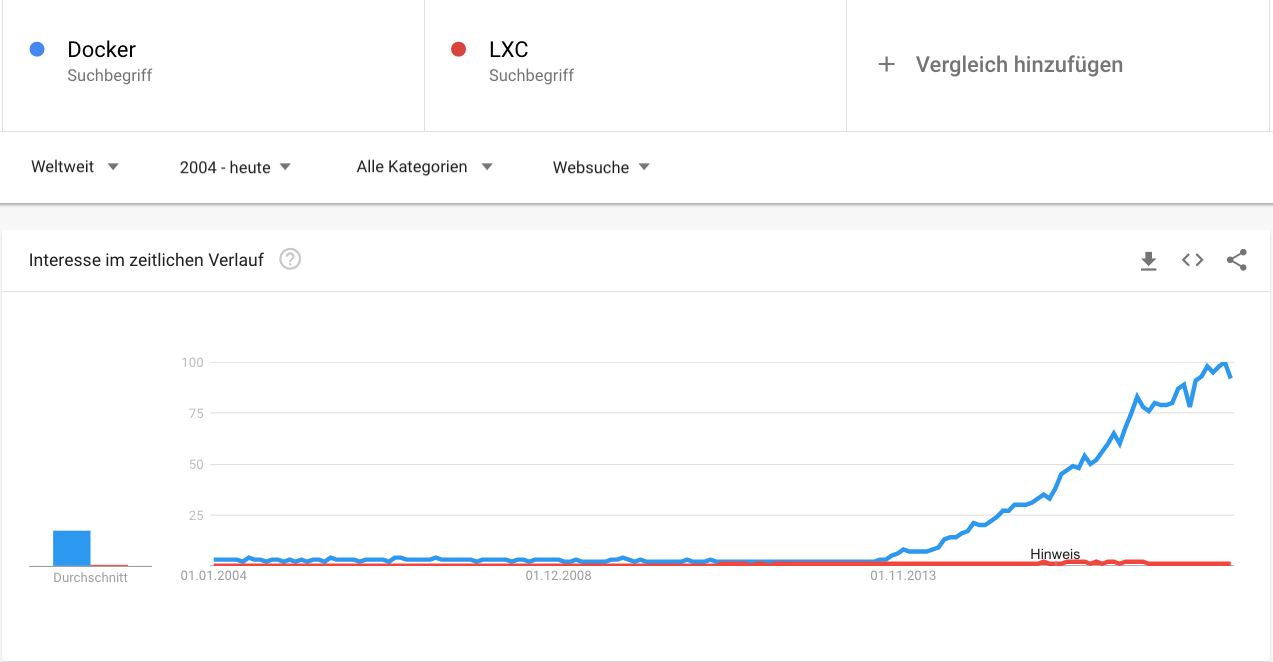

Popularity

If you've just woken up from a decade-long slumber and searched Google for the most popular technology trends of 2017, don't be surprised if you're flooded with websites talking about Docker. A comparison of the Google search trends for Docker and LXC makes the difference very clear.

To be fair, however, it should be noted that the first implementation of Docker was based on LXC and made Linux containers accessible to the masses. LXC itself aimed to give system administrators an easy, VM-like experience, just like Solaris Zones and BSD jails. Docker's focus, by contrast, was from the beginning on the benefits containers offer the developer community, primarily on laptops and across all Linux distributions. To achieve this goal, Docker dropped LXC as its default execution environment in version 0.9, replacing it with its own implementation called libcontainer and eventually with runc, which complies with the OCI specification. While other container alternatives such as rkt, OpenVZ and Cloud Foundry Garden still exist, their use is rather limited. Docker has established a leading market position, with enormous adoption, a comprehensive ecosystem and advanced tools and facilities developed specifically for it.

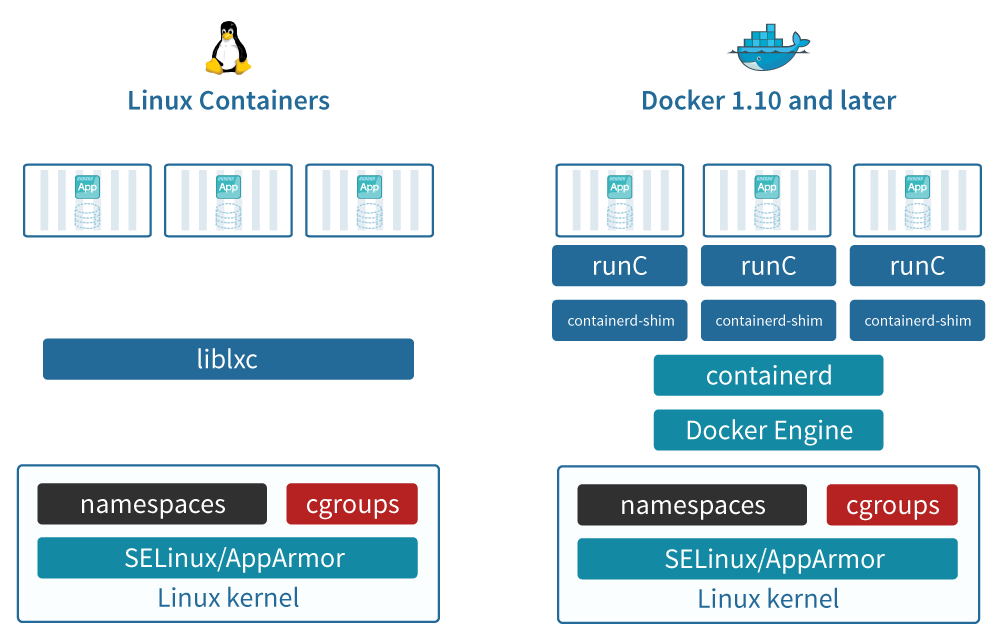

Architecture

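At their core, Docker, LXC and other container technologies rely on two Linux kernel features: namespaces, which isolate what a process can see (process IDs, mounts, network interfaces, host name and so on), and cgroups, which limit and account for the resources it can use (CPU, memory, I/O). For a closer look at these kernel features, we recommend the presentation by Jérôme Petazzoni.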
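Both kernel features can be inspected from user space through the `/proc` file system. The following Python sketch (Linux only; the function names are our own, for illustration) lists the namespaces and cgroups of the current process. If you run it once on the host and once inside a container, most of the namespace identifiers differ, which is exactly the isolation the container runtime sets up.

```python
import os

# Namespace types that a typical modern Linux kernel exposes under /proc/<pid>/ns.
NAMESPACE_TYPES = ("cgroup", "ipc", "mnt", "net", "pid", "user", "uts")


def current_namespaces(ns_dir="/proc/self/ns"):
    """Map each namespace type to its identifier, e.g. 'pid' -> 'pid:[4026531836]'.

    Two processes are in the same namespace exactly when the identifiers match.
    Entries that do not exist (older kernels, non-Linux systems) are skipped.
    """
    namespaces = {}
    for ns_type in NAMESPACE_TYPES:
        try:
            namespaces[ns_type] = os.readlink(os.path.join(ns_dir, ns_type))
        except OSError:
            continue
    return namespaces


def current_cgroups(cgroup_file="/proc/self/cgroup"):
    """Parse a /proc/<pid>/cgroup file into (hierarchy_id, controllers, path) tuples.

    With cgroup v2 there is usually a single line like '0::/user.slice/...',
    i.e. hierarchy 0 with an empty controller list.
    """
    entries = []
    try:
        with open(cgroup_file) as fh:
            for line in fh:
                parts = line.rstrip("\n").split(":", 2)
                if len(parts) != 3 or not parts[0].isdigit():
                    continue  # skip anything that does not look like "id:controllers:path"
                hierarchy_id, controllers, path = parts
                entries.append((int(hierarchy_id), controllers.split(",") if controllers else [], path))
    except OSError:
        pass  # not Linux, or /proc is not mounted
    return entries


if __name__ == "__main__":
    for ns_type, ident in current_namespaces().items():
        print(f"{ns_type:7} {ident}")
    for hierarchy_id, controllers, path in current_cgroups():
        print(hierarchy_id, ",".join(controllers) or "(unified v2)", path)
```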

In the beginning, the Docker architecture looked quite similar to LXC: instead of liblxc, Docker implemented its own library, libcontainer, which provided the execution environment across multiple Linux distributions. Over time, however, several abstraction layers were added to better fit the larger open source ecosystem and to comply with industry standards. Today, the two main components underneath the Docker Engine are containerd and runc.

However, Docker is much more than an image format and a daemon. The complete Docker architecture consists of the following components:

- Docker daemon: runs on a host

- Client: connects to the daemon and is the primary user interface

- Images: read-only template for the creation of containers

- Container: executable instance of a Docker image

- Registry: private or public store for Docker images

- Services: a scheduling service called Swarm that enables multi-host, multi-container deployments. Swarm mode was integrated into the Docker Engine in version 1.12.

Further information can be found in the Docker documentation.
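The split between client and daemon is easy to observe: the Docker CLI talks to the daemon over a REST API (the Docker Engine API), by default through the Unix socket `/var/run/docker.sock`. The following Python sketch sends the same kind of request using only the standard library. The class and function names are our own, and it assumes a running daemon and permission to access the socket.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of a TCP port."""

    def __init__(self, socket_path, timeout=5.0):
        # "localhost" is only used for the Host header; socket_path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_api_get(path, socket_path="/var/run/docker.sock"):
    """Send a GET request to the Docker Engine API and return (status, decoded JSON body)."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        return response.status, (json.loads(body) if body else None)
    finally:
        conn.close()


if __name__ == "__main__":
    # Roughly what `docker version` and `docker ps` ask the daemon for.
    status, info = docker_api_get("/version")
    print(status, info.get("Version"))
    status, containers = docker_api_get("/containers/json")
    print(status, [c["Names"] for c in containers])
```

Every CLI command is ultimately translated into such API calls, which is also why the daemon can be managed remotely or from other tools.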

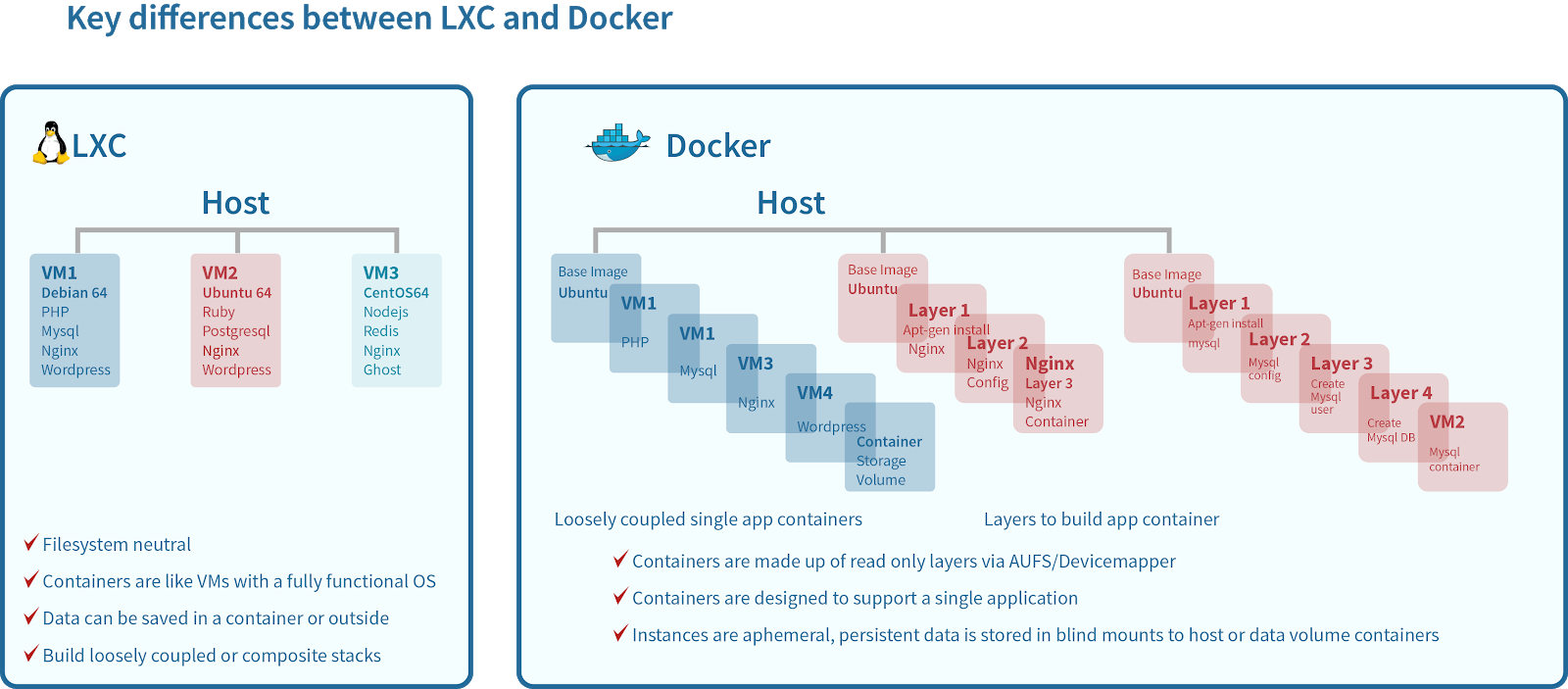

Storage management LXC vs. Docker

LXC storage management is quite simple. It supports a variety of storage backends such as btrfs, lvm, overlayfs and zfs. By default (if no storage backend is defined), LXC simply stores the root file system under /var/lib/lxc/[container-name]/rootfs. For databases and other data-intensive applications, data can be stored directly in the rootfs, or separate external shared storage volumes can be mounted for the data and the rootfs. This makes it possible to use the features of a SAN or NAS storage array. To create an image from an LXC container, the rootfs directory simply needs to be packed into a tar archive.
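As a sketch of that last step, the following Python function packs a container's rootfs from the default location into a compressed tar archive. The function name is our own; it assumes the default `/var/lib/lxc` layout described above and a stopped container. On a real system it has to run as root to read every file, and it makes no attempt to preserve extended attributes.

```python
import os
import tarfile

# LXC's default container location when no storage backend is configured.
DEFAULT_LXC_PATH = "/var/lib/lxc"


def export_rootfs(container_name, archive_path, lxc_path=DEFAULT_LXC_PATH):
    """Pack <lxc_path>/<container_name>/rootfs into a gzip-compressed tar archive.

    Returns the sorted member names of the written archive.
    Stop the container first so that the file system is in a consistent state.
    """
    rootfs = os.path.join(lxc_path, container_name, "rootfs")
    if not os.path.isdir(rootfs):
        raise FileNotFoundError(f"no rootfs found at {rootfs}")
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname keeps member paths relative ("rootfs/etc/...") instead of
        # embedding the host path; owners, modes and symlinks are stored as found.
        tar.add(rootfs, arcname="rootfs")
    with tarfile.open(archive_path, "r:gz") as tar:
        return sorted(tar.getnames())


if __name__ == "__main__":
    names = export_rootfs("web01", "/tmp/web01-rootfs.tar.gz")  # "web01" is a hypothetical container
    print(f"{len(names)} entries written")
```

The resulting archive can then be copied to another host and unpacked into a new container directory there.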

On the other hand, Docker offers a more sophisticated solution for storing containers and managing images.

Let's start with how an image is stored. A Docker image references a list of read-only layers, each representing a set of differences in the file system. These layers are stacked on top of each other, as shown in the image above, and form the basis of the container's root file system. The Docker storage driver stacks and manages these layers and also handles sharing layers between images. This makes creating, moving and copying images fast and saves storage space.

When you create a container, it gets its own thin writable layer on top of the image layers, and all changes are stored in that layer. This means that multiple containers can share the same underlying image and still each have their own data state.

Docker uses a copy-on-write (CoW) strategy for both images and containers by default. This strategy reduces the disk space used by images and speeds up container start-up.
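The layer stacking and copy-on-write behaviour described above can be modelled in a few lines of Python. This is a deliberately simplified, in-memory sketch with our own class names: real storage drivers such as overlay2 work on directories and copy a whole file up into the writable layer on the first write, but reads follow the same top-down lookup order.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Marker for a file deleted in an upper layer (overlayfs records these as "whiteouts").
WHITEOUT = object()


@dataclass(frozen=True)
class Layer:
    """A read-only image layer: the files it adds or changes relative to the layers below."""
    files: Dict[str, bytes]


@dataclass
class Container:
    image_layers: List[Layer]  # bottom to top; shared by all containers of the image
    writable: Dict[str, object] = field(default_factory=dict)  # thin per-container layer

    def read(self, path: str) -> Optional[bytes]:
        # Look from the top down: the writable layer wins, then the newest image layer.
        if path in self.writable:
            value = self.writable[path]
            return None if value is WHITEOUT else value
        for layer in reversed(self.image_layers):
            if path in layer.files:
                return layer.files[path]
        return None

    def write(self, path: str, data: bytes) -> None:
        # Copy-on-write: changes only ever land in this container's writable layer.
        self.writable[path] = data

    def delete(self, path: str) -> None:
        # Image layers are read-only, so a deletion is recorded as a whiteout.
        self.writable[path] = WHITEOUT


if __name__ == "__main__":
    base = Layer({"/etc/os-release": b"ubuntu", "/bin/sh": b"sh"})
    app = Layer({"/app/main.py": b"print('v1')"})
    a, b = Container([base, app]), Container([base, app])
    a.write("/app/main.py", b"print('patched')")
    print(a.read("/app/main.py"), b.read("/app/main.py"))
```

Discarding a `Container` object throws away only its `writable` dictionary while the shared `Layer` objects stay untouched, which mirrors why data written inside a container disappears when the container is removed.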

When a container is deleted, all data stored in its writable layer is lost. For databases and data-centric applications that require persistent storage, Docker makes it possible to mount directories from the host's file system directly into the container. The data is then retained even after the container has been deleted and can be shared between multiple containers. Through volume plugins, Docker can also store data on external storage arrays and storage services such as AWS EBS.
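In practice, the persistent location is requested when the container is started. The following Python sketch only assembles the `docker run` arguments (it does not call Docker) to show the two common variants: a bind mount of a host directory and a named volume managed by Docker. The helper function and the example paths are our own; the `--mount type=bind,source=...,target=...` syntax is Docker's.

```python
import shlex
from typing import List, Optional, Sequence, Tuple


def docker_run_with_mounts(image: str,
                           bind_mounts: Sequence[Tuple[str, str]] = (),
                           named_volumes: Sequence[Tuple[str, str]] = (),
                           name: Optional[str] = None) -> List[str]:
    """Build a `docker run` argument list that keeps data outside the container's writable layer."""
    args = ["docker", "run", "--detach"]
    if name:
        args += ["--name", name]
    for host_dir, container_dir in bind_mounts:
        # Bind mount: a host directory appears inside the container and survives `docker rm`.
        args += ["--mount", f"type=bind,source={host_dir},target={container_dir}"]
    for volume, container_dir in named_volumes:
        # Named volume: storage managed by Docker (or a volume plugin), also independent of the container.
        args += ["--mount", f"type=volume,source={volume},target={container_dir}"]
    args.append(image)
    return args


if __name__ == "__main__":
    cmd = docker_run_with_mounts("postgres:16",
                                 named_volumes=[("pgdata", "/var/lib/postgresql/data")],
                                 name="db")
    print(shlex.join(cmd))
    # To actually start it (requires Docker): subprocess.run(cmd, check=True)
```

Removing and recreating the `db` container with the same volume leaves the database files in `pgdata` intact.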

Further information on Docker storage can be found in the Docker documentation.

Client tools and onboarding

As mentioned above, BSD jails and LXC have focused on system administrators who are looking for a lightweight virtualization solution. For a system administrator, the transition from hypervisor-based virtualization to LXC is a breeze. Everything stays the same: creating container templates, provisioning containers, configuring the operating system, networking, integrating storage, deploying applications and so on. LXC containers can be accessed directly via SSH, so all scripts and automation workflows written for VMs and physical servers also apply to LXC containers. LXC also supports templates, which are essentially shell scripts that install the required packages and create the required configuration files.

Docker, on the other hand, has primarily focused on the developer community. For this reason, it provides customized solutions and tools for creating, versioning and distributing images and for deploying and managing containers, with each application packaged into its image together with all its dependencies. The three most important Docker client tools are:

- Dockerfile: A text file that contains all the commands a user can invoke on the command line to create an image.

- Docker CLI: This is the primary interface for using all Docker functions.

- Docker Compose: A tool for defining and running multi-container Docker applications with a simple YAML file.

It's important to note that while Docker's custom tools make it easier to use, they also bring a steeper learning curve. Developers are used to quickly creating development environments with VirtualBox, VMware Workstation/Player, Vagrant and the like. Administrators, on the other hand, have developed their own scripts and automation workflows for managing test and production environments. Both groups have become accustomed to this arrangement, because the industry has long accepted that the development environment is not the same as the production environment. Docker challenges this and aims to get both groups to use the same tools and technologies across the entire product pipeline. While developers find Docker intuitive and easy to use, primarily because it increases their productivity, IT administrators are still getting their heads around the idea in the first place and in the meantime have to work in a world where containers and VMs coexist. The Docker learning curve for IT administrators remains steep: their existing scripts need to change, SSH access is not available by default, and there are new security considerations to take into account. And even as microservices architectures take hold, administrators still have to maintain their established processes for typical traditional 3-tier applications.

Image registry

One of the key components of the Docker architecture is the image registry, where Docker images are stored and from which they are distributed. Docker provides both a private image registry and a publicly hosted registry called Docker Hub, which is accessible to all Docker users. The Docker client is also integrated directly with Docker Hub: when you run `docker run ubuntu` in your terminal and the image is not yet available locally, the daemon pulls it from the public registry. If you're just getting started with Docker, it's best to start with Docker Hub and familiarize yourself with the enormous number of container images that already exist.

Docker Hub was launched in March 2013, and according to Docker Inc., it had already recorded more than 6 billion pulls by October 2016. In addition to Docker Hub, many other providers offer API-compatible Docker registries, including Quay, AWS and JFrog, to name but a few.
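The short name in `docker run ubuntu` is expanded to a fully qualified reference before any registry is contacted: the registry defaults to Docker Hub (`docker.io`), official images get the implicit `library/` namespace, and the tag defaults to `latest`. Images from other registries such as Quay are addressed by putting the registry host in front. The Python function below is our own simplified re-implementation of these rules for illustration; it does not validate references the way Docker's own parser does.

```python
DEFAULT_REGISTRY = "docker.io"
LEGACY_REGISTRY = "index.docker.io"
OFFICIAL_PREFIX = "library/"


def normalize_image_reference(ref: str) -> str:
    """Expand a short image reference, e.g. 'ubuntu' -> 'docker.io/library/ubuntu:latest'.

    Simplified: no validation of allowed characters or digest formats.
    """
    # Split off an optional digest ("name@sha256:...").
    name, _, digest = ref.partition("@")

    # A tag is a ':' after the last '/', so "localhost:5000/app" has no tag.
    tag = ""
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]

    # The first path component is a registry host only if it looks like one:
    # it contains '.' or ':', is 'localhost', or contains uppercase letters.
    first, sep, rest = name.partition("/")
    looks_like_host = ("." in first or ":" in first or first == "localhost"
                       or first != first.lower())
    if sep and looks_like_host:
        registry, repo = first, rest
    else:
        registry, repo = DEFAULT_REGISTRY, name

    if registry == LEGACY_REGISTRY:
        registry = DEFAULT_REGISTRY
    # Official images on Docker Hub live under the implicit "library/" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repo:
        repo = OFFICIAL_PREFIX + repo

    result = f"{registry}/{repo}"
    if tag:
        result += f":{tag}"
    elif not digest:
        result += ":latest"  # default tag when neither tag nor digest is given
    if digest:
        result += f"@{digest}"
    return result


if __name__ == "__main__":
    for ref in ("ubuntu", "bitnami/redis", "quay.io/coreos/etcd:v3.5.0", "localhost:5000/app"):
        print(f"{ref:28} -> {normalize_image_reference(ref)}")
```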

LXC, on the other hand, has no dedicated registries, owing to its relatively simple storage management for both the container file system and the images. Most vendors that support LXC provide their own mechanisms for storing LXC images and deploying them to various servers. The linuxcontainers.org website provides a list of base images created with the help of the community. Similar to Docker, LXC provides a download template that can be used to look up images from this source and create containers from them on the fly. The command looks like `sudo lxc-create -t download -n <container-name>`.
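The download template accepts its own options after a `--` separator, where the distribution, release and architecture are chosen. The following Python sketch merely assembles that command line using the `--dist`, `--release` and `--arch` options of LXC's download template. The helper name and the example values (`web01`, Ubuntu `jammy`, `amd64`) are our own, and which distributions and releases are available depends on the image server at the time.

```python
import shlex
from typing import List


def lxc_create_download(name: str, dist: str, release: str, arch: str = "amd64",
                        use_sudo: bool = True) -> List[str]:
    """Build the argv for creating a container from the linuxcontainers.org image server."""
    cmd = ["sudo"] if use_sudo else []
    cmd += ["lxc-create", "--template", "download", "--name", name]
    # Everything after "--" is passed on to the download template itself.
    cmd += ["--", "--dist", dist, "--release", release, "--arch", arch]
    return cmd


if __name__ == "__main__":
    print(shlex.join(lxc_create_download("web01", "ubuntu", "jammy")))
    # To actually create the container (requires LXC and root):
    # subprocess.run(lxc_create_download("web01", "ubuntu", "jammy"), check=True)
```

Passing the options explicitly makes the call non-interactive, which suits provisioning scripts that already exist for VMs.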

Application support

The application area can be roughly divided into modern, microservice-based and traditional enterprise applications.

Microservices architecture has gained popularity with web companies such as Netflix, Google and Twitter. Applications with a microservices architecture consist of a set of tightly focused, independently deployable services that are expected to fail or malfunction from time to time. The advantages are increased agility and resilience: agility, because individual services can be updated and redeployed in isolation; resilience, because the distributed nature of microservices means they can be deployed across different platforms and infrastructures, and developers are forced to think about resilience from the ground up.

Together, microservices and containers make it possible to build applications faster and maintain them more easily, with higher overall quality. This makes Docker a perfect fit: it has aligned its container solution with the microservices philosophy and recommends that each container deals with a single concern. As a result, applications can now consist of hundreds or even thousands of different containers.

Currently, microservices architecture is still relatively new, and relatively few applications are based on it. Much of the enterprise data center is dominated by typical 3-tier applications (web, app and db). These applications are written in Java, Ruby, Python, etc., often use a single application server and database, and require large CPU and memory allocations, as most of their components communicate via in-memory function calls. Managing these applications requires administrators to shut down the application services, apply patches and upgrades, make configuration changes and then restart the services. All of this assumes that you have full control over the application and can change its state without losing access to the underlying infrastructure.

For existing or traditional enterprise applications, LXC seems to be the logical choice. Sysadmins can easily convert existing applications that still run on physical servers or in VMs into LXC containers. Docker also promotes its technology for existing applications, but getting such applications up and running with it requires significant commitment and work. This will of course become easier over time, as most vendors now provide their software as Docker images, which should make it easier to get new applications up and running.

If you are writing new applications from scratch, regardless of whether they are based on microservices or 3-tier architectures, Docker is often the better solution in the end. But if you want all the benefits of containers without significantly changing operations, then LXC will often be the better option.

Vendor support & ecosystem

Both Docker and LXC are open source projects. Docker is backed by Docker Inc., while LXC and LXD (the container hypervisor) are now backed by Canonical, the company behind Ubuntu. Docker Inc. offers an enterprise distribution of Docker called Docker DataCenter, and many other vendors also offer official distributions. In contrast, there are very few LXC vendors, and most of them support LXC only as an additional container technology.

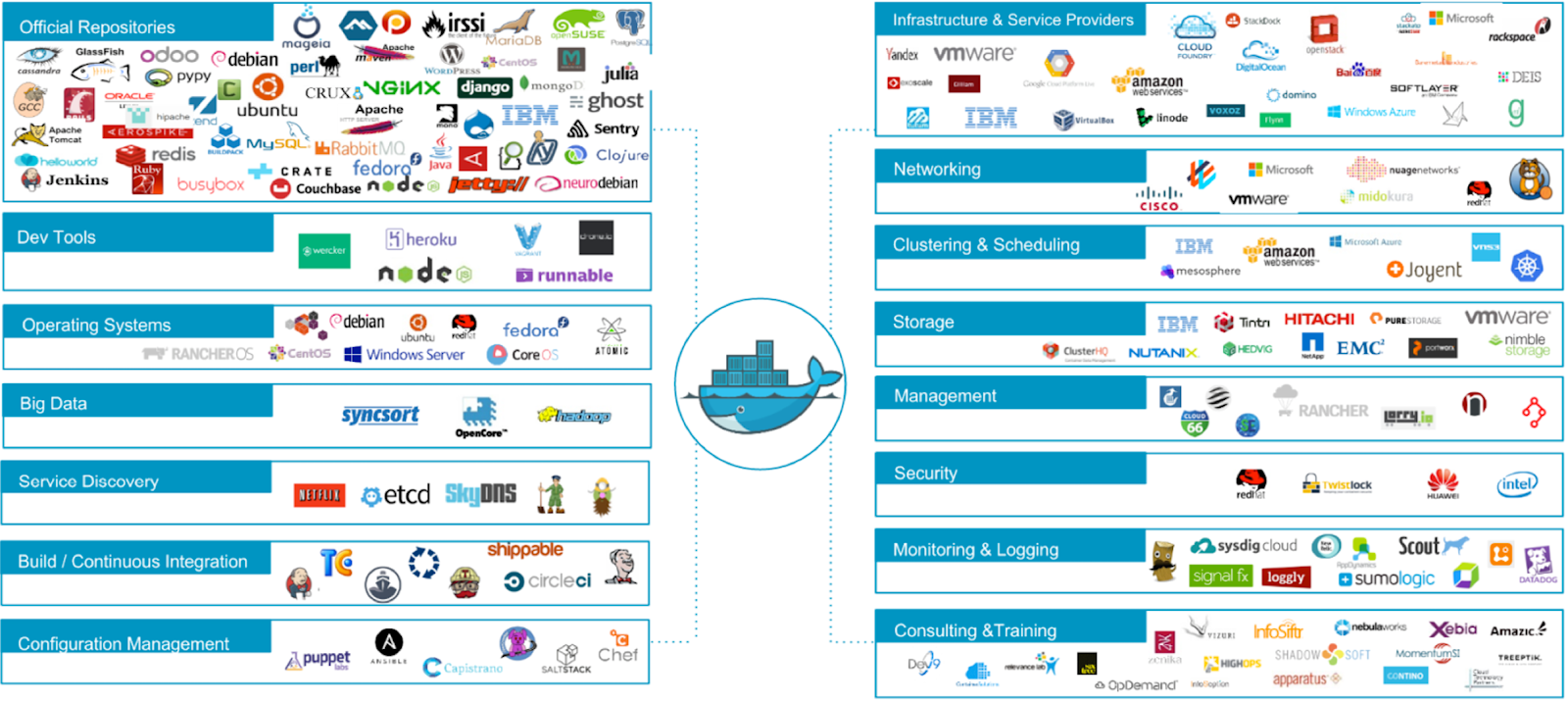

Compared with VMs, containers are still quite a young topic: the solutions are still immature and often have functional gaps. This has led to massive growth in the number of companies offering a wide range of container-related solutions, and an enormous ecosystem has emerged. The image above shows some of the partners supporting the Docker ecosystem. LXC does not have such a rich, dedicated ecosystem, but this is primarily due to its native VM-like experience: most tools that work with VMs should naturally be compatible with LXC containers as well.

In terms of platform support, Docker has now also been ported to Windows, and all major cloud providers (AWS, Azure, Google and IBM) now offer native Docker support. This is a huge development for containers and underlines the growing trend.

Summary

As with any blog post of this kind comparing two almost identical technologies, the answer to which one is ultimately better is often the familiar "it depends". Both Docker and LXC have enormous potential and offer the same advantages in terms of performance, consolidation, deployment speed and so on. However, because Docker is much more focused on the developer community, its popularity has skyrocketed and continues to grow. LXC, on the other hand, has seen limited adoption but appears to be an extremely viable alternative for existing traditional applications. VM administrators would also find the transition to LXC easier than the transition to Docker, but will need to support both container technologies.

Why we at TechDivision now rely on LXC and no longer find Docker so "exciting"

In our developers' local development environments, there were several reasons for returning to a native toolset. Above all, Docker's performance still leaves a lot to be desired, especially when developing Magento 2 projects: because containers on macOS run inside an "xhyve"-based virtual machine, sharing many files from the macOS host across the virtualization layer into the corresponding Docker containers is slow. Alternative solutions such as docker-sync, or a tool we developed ourselves to synchronize files with the Docker containers during development, bring a significant performance gain, but they are less stable and completely bypass Docker's own mechanism for mounting directories and files into containers. Updates to Docker for macOS did not stabilize this functionality as hoped and mostly presented us with new challenges. As our focus in the development phase of projects is always on performance and efficiency, we now rely on native development environments. For the time being, we are using Valet+ for this purpose. Valet+ is open source and available on GitHub (https://github.com/weprovide/valet-plus). It is a fork of Laravel Valet that adds functionality with the aim of making things even easier and faster. We are very grateful to the Laravel team and the company We Provide for making the original and the fork available. Our research and development department is currently working on our own standardized solution for native development environments on macOS, which will completely replace Valet+ and the associated Homebrew setup in the medium term.

In our Linux-based CI environments, Docker as a container solution was basically fine at first, but over time it did not deliver the hoped-for benefits in practice: for the reasons mentioned above, the local development systems could not be replicated in the same form, which made a homogeneous system landscape during development impossible. Particularly while developing our new Build & Deployment Framework (BDF), we found LXC and LXD more appealing in many respects, including handling, performance, maintenance and automation. With a few small exceptions, we have now said goodbye to Docker completely and "released the whale back into the wild" ;).

Do you have any questions?

Simply send us an e-mail to anfragen (at) techdivision.com or call us on +49 8031 22105-0.